NVIDIA DGX A100 is an integrated system for artificial intelligence (AI) and high-performance computing (HPC) workloads, introduced by NVIDIA in 2020. It combines GPUs, CPUs, high-speed networking, storage, and a preinstalled software stack in a single server, so teams can train and deploy demanding models without assembling that infrastructure themselves.

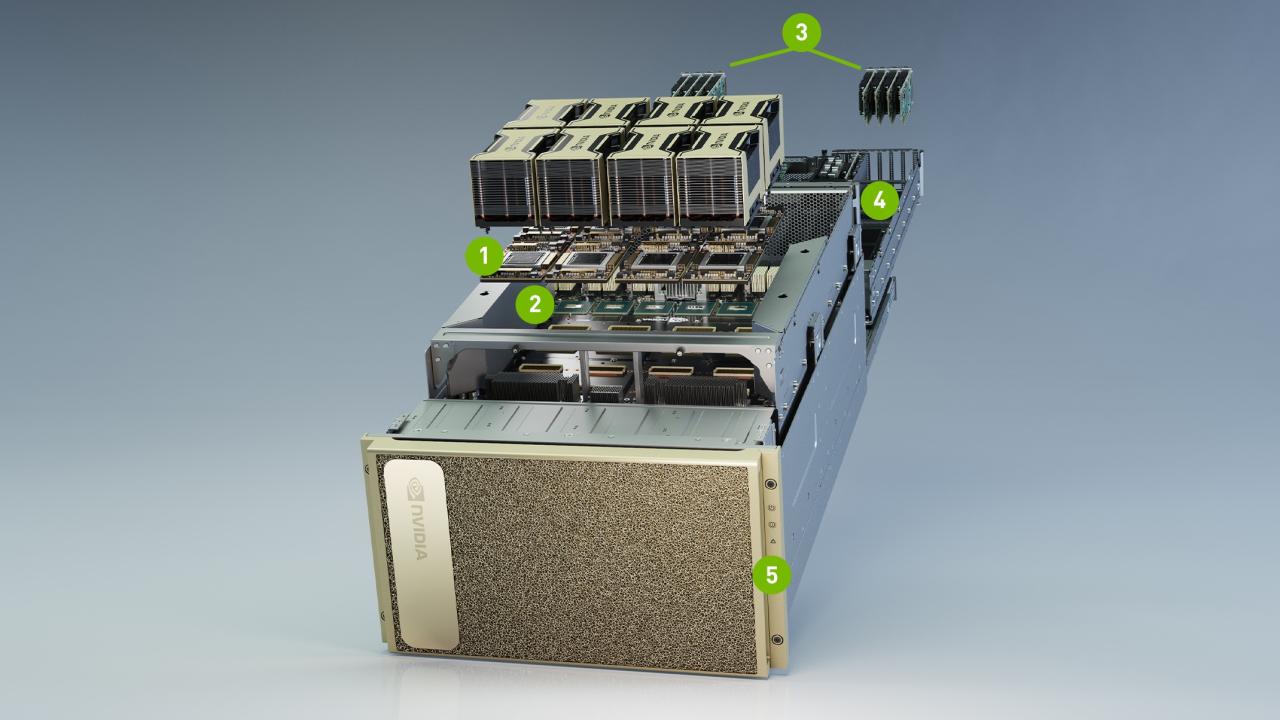

At its core, the DGX A100 is built around eight NVIDIA A100 Tensor Core GPUs, linked to one another through NVLink and NVSwitch so they can exchange data far faster than over PCIe. The A100’s Tensor Cores accelerate the matrix operations at the heart of deep learning and support formats ranging from TF32, FP16, and BF16 for AI training to FP64 for scientific computing. This makes the system well suited to handling massive datasets, training complex models, and accelerating research and development across industries.

Challenges and Limitations

While the DGX A100 offers impressive performance and capabilities, it also presents certain challenges and limitations that users should be aware of. These aspects can influence the deployment, utilization, and overall effectiveness of the system.

Cost and Complexity of Deployment

Deploying and maintaining a DGX A100 system involves significant costs and complexities. The initial purchase price of the system is substantial, and ongoing operational expenses, such as power consumption, cooling, and maintenance, can be considerable.

- The DGX A100 system itself is a significant investment: it launched in 2020 at a US list price of $199,000. That cost can be a major barrier for many organizations, particularly smaller companies or research groups with limited budgets.

- The system draws up to 6.5 kW at peak, so it needs data-center power distribution and cooling sized for that load, which can add further costs to the deployment.

- Maintaining a DGX A100 system requires specialized technical expertise, which can be difficult to find and expensive to hire. This includes managing the system’s software and hardware, ensuring optimal performance, and troubleshooting any issues that may arise.
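To put the power figure in perspective, the yearly electricity bill can be estimated with simple arithmetic: energy (kWh) = power (kW) × hours × average load × PUE, multiplied by the price per kWh. The sketch below assumes a hypothetical electricity price, a PUE (power usage effectiveness) factor standing in for cooling overhead, and a utilization factor that approximates average draw; real draw varies with workload, so treat the result as a rough bound rather than a quote.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(
    power_kw: float,
    price_per_kwh: float,
    pue: float = 1.0,
    utilization: float = 1.0,
) -> float:
    """Estimate one system's yearly electricity cost in dollars.

    power_kw      -- electrical draw of the system in kilowatts
    price_per_kwh -- electricity price in dollars per kilowatt-hour
    pue           -- facility power usage effectiveness; 1.0 means no
                     cooling or power-distribution overhead
    utilization   -- fraction of the year the system draws power_kw
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    energy_kwh = power_kw * HOURS_PER_YEAR * utilization * pue
    return energy_kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: 6.5 kW peak draw, $0.12/kWh, PUE 1.5, 70% average load.
    cost = annual_energy_cost(6.5, 0.12, pue=1.5, utilization=0.7)
    print(f"Estimated annual energy cost: ${cost:,.0f}")
```

Keeping PUE as a separate factor makes it easy to compare the same system in facilities with different cooling efficiency.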

Scalability and Interoperability

While the DGX A100 offers impressive computing power, its scalability and interoperability with other systems can present challenges.

- Scaling beyond a single system means building a multi-node cluster over a high-speed fabric such as InfiniBand, as in NVIDIA’s DGX SuperPOD reference architecture. This requires careful planning of networking, storage, and job scheduling to keep every GPU busy and make good use of resources.

- Integrating the DGX A100 system with existing infrastructure and workflows can be complex, requiring careful consideration of compatibility issues and potential bottlenecks.

- The DGX A100 system is designed for specific use cases, such as deep learning and high-performance computing. It may not be suitable for all applications, and its specialized nature can limit its versatility.
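One way to check whether scaling is paying off is to compare measured throughput against ideal linear speedup. The helper below is a minimal sketch of that calculation; the throughput numbers in the usage block are hypothetical, and "samples per second" is just one possible throughput metric.

```python
def scaling_efficiency(
    baseline_throughput: float,
    scaled_throughput: float,
    scale_factor: int,
) -> float:
    """Return measured speedup divided by ideal (linear) speedup.

    baseline_throughput -- samples/s on the baseline configuration (e.g. 1 GPU)
    scaled_throughput   -- samples/s on the scaled configuration
    scale_factor        -- how many times larger the scaled configuration is
    """
    if baseline_throughput <= 0 or scale_factor <= 0:
        raise ValueError("throughput and scale factor must be positive")
    speedup = scaled_throughput / baseline_throughput
    return speedup / scale_factor


if __name__ == "__main__":
    # Hypothetical measurements in samples/s, keyed by GPU count.
    measured = {1: 1000.0, 8: 7200.0, 16: 12800.0}
    for gpus, throughput in measured.items():
        eff = scaling_efficiency(measured[1], throughput, gpus)
        print(f"{gpus:>2} GPUs: {eff:.0%} scaling efficiency")
```

An efficiency that drops sharply when moving from one node to several often points at the inter-node network or data pipeline rather than the GPUs themselves.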

Software and Application Support

The DGX A100 system relies heavily on specialized software and applications, which can present challenges in terms of availability, compatibility, and support.

- The DGX A100 system is optimized for NVIDIA’s software stack, including CUDA and cuDNN. This delivers excellent performance but ties workloads to the CUDA ecosystem, so code written for other accelerators may need porting.

- The DGX A100 system requires specific software configurations and updates to maintain optimal performance and security. Managing these updates and ensuring compatibility with other systems can be a complex process.

- As a then-new platform at its 2020 launch, the DGX A100 could have more limited software support and documentation than more established systems.
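Part of keeping the software stack consistent is confirming that the installed GPU driver is new enough for the CUDA toolkit or containers you run. The sketch below reads the driver version through `nvidia-smi --query-gpu=driver_version` and compares it numerically against a minimum. The `MIN_DRIVER_VERSION` value is a hypothetical placeholder; take the real requirement from the release notes of your CUDA toolkit or container.

```python
from __future__ import annotations

import shutil
import subprocess

# Hypothetical minimum driver version; replace it with the version that your
# CUDA toolkit or container release notes actually require.
MIN_DRIVER_VERSION = "450.80.02"


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '470.57.02' into (470, 57, 2)."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_is_supported(installed: str, minimum: str = MIN_DRIVER_VERSION) -> bool:
    """Compare versions numerically, so '470.57' correctly beats '450.80.02'."""
    return parse_version(installed) >= parse_version(minimum)


def query_driver_version() -> str | None:
    """Ask nvidia-smi for the driver version; None if nvidia-smi is absent."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    # nvidia-smi prints one line per GPU; all GPUs share one driver.
    return result.stdout.splitlines()[0].strip()


if __name__ == "__main__":
    version = query_driver_version()
    if version is None:
        print("nvidia-smi not found; is the NVIDIA driver installed?")
    else:
        status = "OK" if driver_is_supported(version) else "too old"
        print(f"Driver {version}: {status}")
```

Comparing integer tuples instead of raw strings avoids the classic mistake where "1000" sorts before "999".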

Best Practices and Optimization Techniques

The DGX A100 is a powerful system, but maximizing its performance requires careful attention to best practices and optimization techniques. This section will discuss various methods for tuning applications and workflows to achieve optimal efficiency and scalability on the DGX A100.

Application Tuning

Optimizing applications for the DGX A100 involves various techniques, including:

- Code Profiling: Understanding the performance bottlenecks in your application is crucial. Profiling tools like NVIDIA Nsight Systems can help identify areas for improvement.

- Memory Optimization: Reduce memory usage by choosing compact data structures and minimizing unnecessary copies, especially between host and GPU memory. Techniques such as zero-copy data transfers and pre-allocating memory instead of allocating inside hot loops help here.

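- Parallelism and Concurrency: Spread work across the system’s eight GPUs, for example with your framework’s data-parallel training and NCCL for collective communication between GPUs. Within each GPU, CUDA streams let kernels and data transfers overlap.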

- Hardware Acceleration: Utilize hardware acceleration libraries like cuBLAS, cuFFT, and cuRAND for optimized linear algebra, Fast Fourier Transforms, and random number generation, respectively.
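Profiling is usually the first step, since it shows which of the techniques above will actually help. The sketch below only builds an Nsight Systems command line for profiling a Python script; `--trace` selects which APIs `nsys` records and `--output` names the report file. The script name `train.py` and the default report name are hypothetical, and you may want different trace options for your workload.

```python
from __future__ import annotations

import shlex
import sys


def build_nsys_command(
    script: str,
    output: str = "profile_report",
    trace: tuple[str, ...] = ("cuda", "nvtx", "osrt"),
) -> list[str]:
    """Build an Nsight Systems command line that profiles a Python script.

    --trace records CUDA API calls, NVTX ranges, and OS runtime calls;
    --output names the report file that nsys writes.
    """
    return [
        "nsys",
        "profile",
        f"--trace={','.join(trace)}",
        f"--output={output}",
        sys.executable,  # profile the same Python interpreter running this code
        script,
    ]


if __name__ == "__main__":
    # "train.py" is a hypothetical training script; run the printed command
    # on the DGX A100 and open the report in the Nsight Systems GUI.
    print(shlex.join(build_nsys_command("train.py")))
```

In the resulting timeline, gaps between GPU kernels usually indicate that the CPU side (data loading, Python overhead, synchronization) is holding the GPUs back.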

Workflow Optimization

Optimizing the overall workflow on the DGX A100 involves:

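- Data Preprocessing: Make data loading and preprocessing fast enough to keep the GPUs busy. Load data with parallel workers, and run expensive steps such as data augmentation in those workers or on the GPU (for example with NVIDIA DALI) rather than serially on the main thread.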

- Model Training and Inference: Use mixed precision to take advantage of the A100’s Tensor Cores, tune hyperparameters such as batch size, apply early stopping to avoid wasted epochs, and serve models with optimized inference runtimes such as TensorRT.

- Resource Management: Manage resources effectively by monitoring GPU utilization, memory usage, and network bandwidth. The NVIDIA System Management Interface (nvidia-smi) reports GPU utilization and memory and can also manage GPU settings.
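For scripted monitoring, `nvidia-smi` can print selected fields as CSV with `--query-gpu=... --format=csv,noheader,nounits` (memory is then reported in MiB). The sketch below parses that output and flags GPUs running below a utilization threshold; the 50% threshold is an arbitrary, hypothetical choice, and rows with unavailable fields are simply skipped.

```python
from __future__ import annotations

import shutil
import subprocess
from dataclasses import dataclass

# Fields passed to `nvidia-smi --query-gpu`; with nounits, memory is in MiB.
QUERY_FIELDS = "index,utilization.gpu,memory.used,memory.total"


@dataclass
class GpuStatus:
    index: int
    utilization_pct: int
    memory_used_mib: int
    memory_total_mib: int


def parse_gpu_status(csv_text: str) -> list[GpuStatus]:
    """Parse `--format=csv,noheader,nounits` output, one GPU per line."""
    statuses = []
    for line in csv_text.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        try:
            index, util, used, total = (int(f) for f in fields)
        except ValueError:
            # Skip rows with a missing or non-numeric field (e.g. "[N/A]").
            continue
        statuses.append(GpuStatus(index, util, used, total))
    return statuses


def underused_gpus(statuses: list[GpuStatus], threshold_pct: int = 50) -> list[int]:
    """Return indices of GPUs whose utilization is below threshold_pct."""
    return [s.index for s in statuses if s.utilization_pct < threshold_pct]


def query_gpu_status() -> list[GpuStatus]:
    """Run nvidia-smi once; return an empty list if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_status(result.stdout)


if __name__ == "__main__":
    statuses = query_gpu_status()
    for gpu in statuses:
        print(f"GPU {gpu.index}: {gpu.utilization_pct}% busy, "
              f"{gpu.memory_used_mib}/{gpu.memory_total_mib} MiB")
    print("Underused GPUs:", underused_gpus(statuses))
```

Separating parsing from the subprocess call keeps the parsing logic testable on machines without GPUs.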

Scaling Applications

Scaling applications on a DGX A100 cluster requires careful consideration of:

- Data Distribution: Distribute data across the cluster efficiently using techniques like data partitioning and parallel data loading.

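- Training Parallelism: Split training across GPUs with data parallelism (each GPU holds a full model replica and processes a different slice of each batch) or model parallelism (the model itself is split across GPUs when it is too large for one GPU’s memory).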

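- Communication Optimization: Keep traffic on the fastest available path, NVLink and NVSwitch within a node and InfiniBand between nodes, and reduce how much data is exchanged and how often, for example by overlapping gradient synchronization with computation.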
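Data partitioning for data-parallel training can be illustrated with a small index-sharding function: each worker (rank) receives a distinct subset of sample indices, and every rank gets the same count so collective operations stay in lockstep. This is a minimal sketch, similar in spirit to the round-robin scheme used by PyTorch’s `DistributedSampler`, not a drop-in replacement for it; the function name and signature are hypothetical.

```python
from __future__ import annotations

import math
import random


def shard_indices(
    num_samples: int,
    rank: int,
    world_size: int,
    seed: int | None = None,
) -> list[int]:
    """Return the dataset indices that worker `rank` of `world_size` should read.

    Every rank gets the same number of indices: the index list is padded by
    repeating indices from its start, then dealt out round-robin.
    """
    if num_samples <= 0 or world_size <= 0 or not 0 <= rank < world_size:
        raise ValueError("invalid num_samples, rank, or world_size")
    indices = list(range(num_samples))
    if seed is not None:
        # Same seed on every rank gives the same permutation, so the shards
        # do not overlap (apart from padding).
        random.Random(seed).shuffle(indices)
    per_rank = math.ceil(num_samples / world_size)
    total = per_rank * world_size
    padding = total - num_samples
    if padding:
        repeats = math.ceil(padding / num_samples)
        indices += (indices * repeats)[:padding]
    # Round-robin: rank r takes positions r, r + world_size, r + 2*world_size, ...
    return indices[rank:total:world_size]


if __name__ == "__main__":
    # 10 samples across 4 hypothetical workers.
    for r in range(4):
        print(r, shard_indices(10, rank=r, world_size=4))
```

Changing the seed each epoch (while keeping it identical across ranks) reshuffles the data without letting workers read overlapping samples.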

Concluding Remarks

The NVIDIA DGX A100 represents a significant step forward in high-performance computing and artificial intelligence. Its capabilities let researchers, scientists, and engineers tackle problems that were previously impractical at their scale, driving progress in fields from drug discovery to autonomous driving.

Because the DGX A100 often processes large volumes of sensitive data, access to the system and the data it manages must be secured. A secrets-management server, which stores and controls access to credentials such as passwords and API keys, can play a vital role here: it helps ensure that only authorized personnel can reach sensitive information on the DGX A100, maintaining data integrity and protecting against unauthorized access.